Work Done

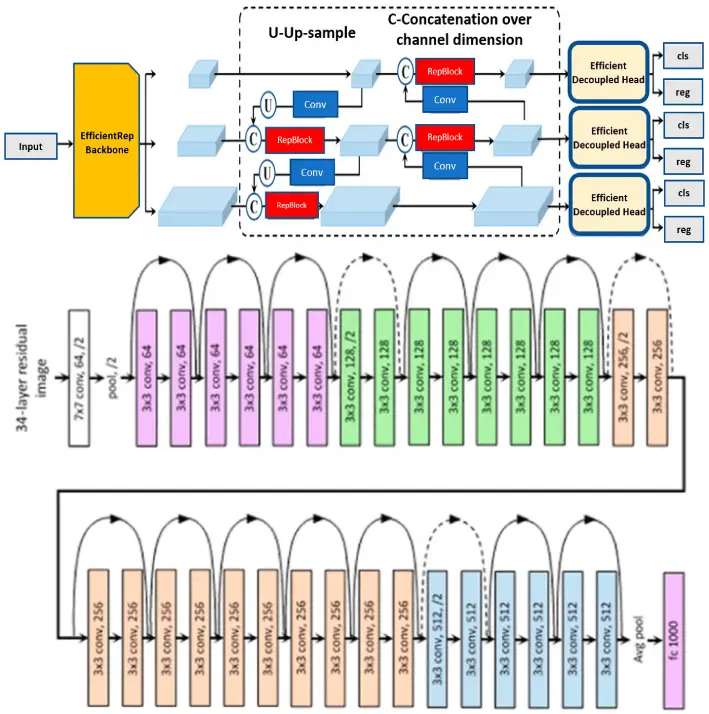

In this project, YOLOv8 was used for face detection on the WIDER FACE dataset. The first steps were to prepare dataset loaders tailored to the dataset, split the data into training, validation, and test sets, and arrange the labels in the format the detector expects.
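The label arrangement step can be sketched as follows. This is a minimal illustration, not the project's actual loader code: it assumes the raw WIDER FACE annotations, which give each face as a top-left pixel box `(x, y, w, h)`, and converts one box to a YOLO label line (`class cx cy w h`, all normalized to the image size). The function name `wider_to_yolo` and the single class id `0` are hypothetical choices made for the example.

```python
def wider_to_yolo(box, img_w, img_h, class_id=0):
    """Convert one top-left pixel box (x, y, w, h) to a YOLO label line.

    Returns None for boxes that are empty after clipping to the image.
    """
    x, y, w, h = box
    # Clip the box to the image so the normalized values stay in [0, 1].
    x1, y1 = max(0.0, x), max(0.0, y)
    x2, y2 = min(float(img_w), x + w), min(float(img_h), y + h)
    bw, bh = x2 - x1, y2 - y1
    if bw <= 0 or bh <= 0:
        return None  # degenerate box, skip it
    # YOLO labels store the box center and size as fractions of the image.
    cx = (x1 + x2) / 2 / img_w
    cy = (y1 + y2) / 2 / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {bw / img_w:.6f} {bh / img_h:.6f}"


if __name__ == "__main__":
    # One face at (100, 50) with size 200x100 in a 1000x500 image.
    print(wider_to_yolo((100, 50, 200, 100), 1000, 500))
```

Each image would then get one text file containing one such line per face. Zero-size boxes are dropped here because they cannot be expressed as a valid YOLO label.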

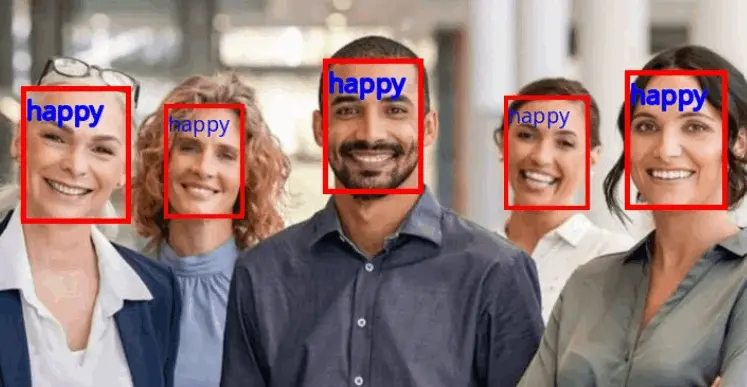

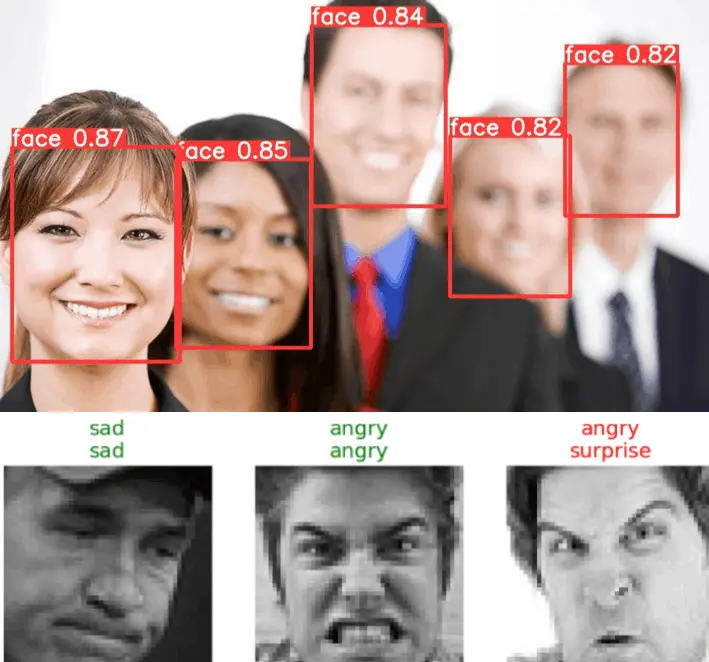

The model was fine-tuned for 10 epochs and detected faces accurately, albeit with relatively low confidence scores. A single-stage architecture, with the different facial moods as its detection classes, could have been used instead. This would have been faster but might have compromised accuracy.
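In the two-stage design, each detected face is handed to a separate classifier. A rough sketch of that hand-off is shown below, assuming detections arrive as `(x1, y1, x2, y2, confidence)` tuples in pixel coordinates. The function name `face_crops`, the `min_conf` threshold of 0.25, and the `pad` margin are hypothetical values chosen for illustration; since the detector's confidence scores were on the low side, the threshold would need tuning in practice.

```python
def face_crops(detections, img_w, img_h, min_conf=0.25, pad=0.1):
    """Return integer crop boxes (x1, y1, x2, y2) for confident detections.

    detections: iterable of (x1, y1, x2, y2, conf) in pixel coordinates.
    pad: fraction of the box size added on every side for context.
    """
    crops = []
    for x1, y1, x2, y2, conf in detections:
        if conf < min_conf:
            continue  # drop low-confidence detections
        dx, dy = (x2 - x1) * pad, (y2 - y1) * pad
        # Expand the box, then clamp it to the image bounds.
        cx1 = max(0, int(x1 - dx))
        cy1 = max(0, int(y1 - dy))
        cx2 = min(img_w, int(x2 + dx))
        cy2 = min(img_h, int(y2 + dy))
        if cx2 > cx1 and cy2 > cy1:
            crops.append((cx1, cy1, cx2, cy2))
    return crops


if __name__ == "__main__":
    dets = [(100, 100, 200, 200, 0.4), (0, 0, 50, 50, 0.1)]
    print(face_crops(dets, img_w=640, img_h=480))
```

Each returned box can be used to slice the image array before resizing the crop to the classifier's input size.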

Then I imported the ResNet34 architecture to serve as the backbone for the classification model. This was used with a Kaggle facial expression recognition dataset. To facilitate fine-tuning, the last layers of ResNet34 were modified to match the mood classes: Angry, Surprise, Disgust, Sad, Happy. The process began with an initial fine-tuning stage in which the learning rate was determined dynamically using the "get learning rate" step. The model was then fine-tuned for three epochs, with all layers frozen except the last.

After this, the learning rate determination step was run again, and the model was fine-tuned for a further five epochs with all layers unfrozen, reaching 65% accuracy on the classification task.
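The write-up does not say how the "get learning rate" step works. One common approach is a learning rate range test, of the kind fastai exposes as `lr_find`, and the sketch below assumes that is what was meant. It illustrates the idea on a toy one-parameter problem rather than on ResNet34: train briefly while growing the learning rate exponentially, record the loss, and pick the rate at which the loss falls fastest. All names here (`lr_range_test`, `suggest_lr`) are hypothetical.

```python
import math


def lr_range_test(loss_fn, grad_fn, w0, lr_min=1e-5, lr_max=10.0, steps=100):
    """Run gradient descent while growing the learning rate exponentially.

    Returns the learning rates tried and the loss observed before each step.
    """
    w = w0
    lrs, losses = [], []
    best = float("inf")
    for i in range(steps):
        lr = lr_min * (lr_max / lr_min) ** (i / (steps - 1))
        loss = loss_fn(w)
        if not math.isfinite(loss) or loss > 4 * best:
            break  # stop once the loss clearly diverges
        best = min(best, loss)
        lrs.append(lr)
        losses.append(loss)
        w = w - lr * grad_fn(w)
    return lrs, losses


def suggest_lr(lrs, losses):
    """Pick the learning rate where the loss drops fastest per unit log(lr)."""
    slopes = [
        (losses[i + 1] - losses[i]) / (math.log(lrs[i + 1]) - math.log(lrs[i]))
        for i in range(len(lrs) - 1)
    ]
    steepest = min(range(len(slopes)), key=slopes.__getitem__)
    return lrs[steepest]


if __name__ == "__main__":
    # Toy problem: minimize (w - 3)^2 starting from w = 0.
    lrs, losses = lr_range_test(lambda w: (w - 3) ** 2, lambda w: 2 * (w - 3), 0.0)
    print(suggest_lr(lrs, losses))
```

In the two-phase schedule described above, a test like this would be run once with only the last layer trainable and again after unfreezing all layers, since the suitable learning rate usually differs between the two settings.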