Introduction

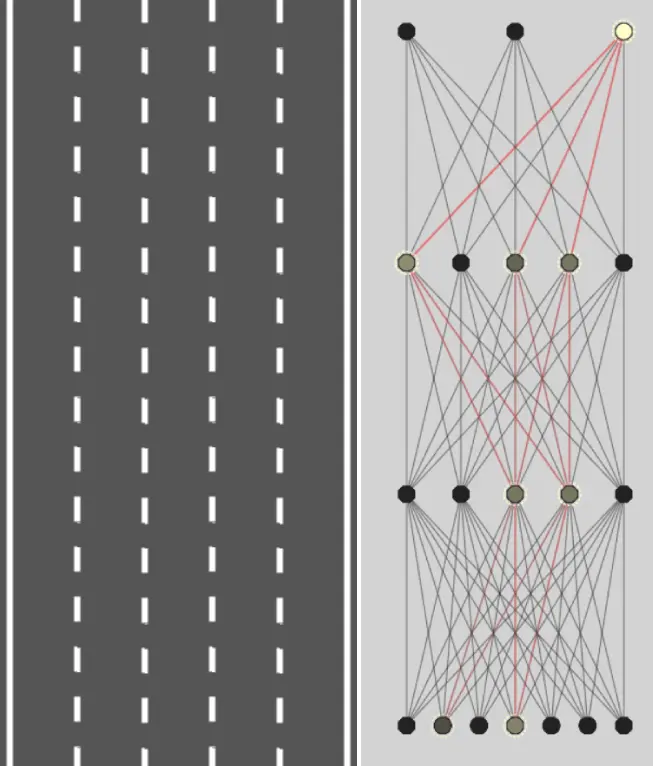

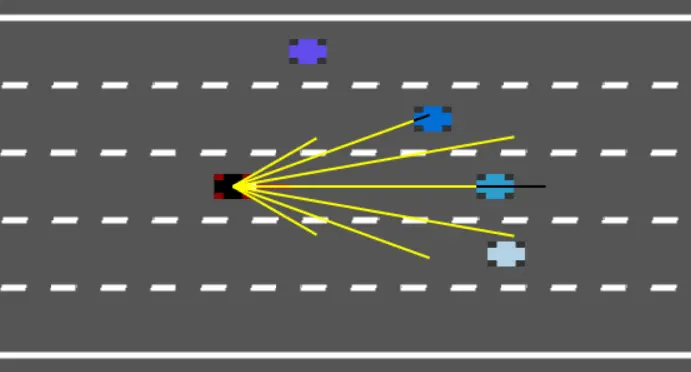

This project was inspired by Radu Mariescu's tutorial series. Autonomous agents use neural networks to map sensory input to control decisions, and that mapping is learned through reinforcement learning. Autonomous vehicles need extensive training, and simulation speeds it up considerably. As a first step, this project builds a simulator of vehicle dynamics.

The simulator is then used to train a basic neural network to navigate complex traffic scenarios. This approach streamlines training and helps autonomous cars learn the intricacies of real-world environments more efficiently.
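
To make the idea of mapping sensory input to control decisions concrete, here is a minimal sketch of a feed-forward network in plain Python. It is an illustration, not this project's actual code: the five sensor readings, the 5-6-4 layer sizes, the step activation, and the names `make_layer` and `feed_forward` are all assumptions. The weights are random, so the sketch shows only the forward pass and not the training.

```python
import random


def make_layer(n_in, n_out, rng):
    """Create one fully connected layer with random weights and biases in [-1, 1]."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases


def feed_forward(layers, inputs):
    """Propagate inputs through every layer using a binary step activation.

    A neuron outputs 1 when its weighted input sum exceeds its bias, else 0.
    """
    activations = list(inputs)
    for weights, biases in layers:
        activations = [
            1 if sum(w * a for w, a in zip(row, activations)) > bias else 0
            for row, bias in zip(weights, biases)
        ]
    return activations


# Hypothetical setup: 5 distance sensors -> 6 hidden neurons -> 4 controls.
rng = random.Random(0)
network = [make_layer(5, 6, rng), make_layer(6, 4, rng)]

# Assumed sensor encoding: 0.0 means nothing detected, values near 1.0 mean
# an obstacle is close along that sensor ray.
sensor_readings = [0.0, 0.2, 0.9, 0.2, 0.0]

controls = feed_forward(network, sensor_readings)
forward, left, right, reverse = controls
print(controls)
```

Each output is 0 or 1 and can be read as "press" or "release" for one control. With untrained random weights the decisions are arbitrary; training in the simulator is what would turn them into useful driving behavior.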